Why Good Systems Go Bad: How Tech Debt Grows One Decision at a Time

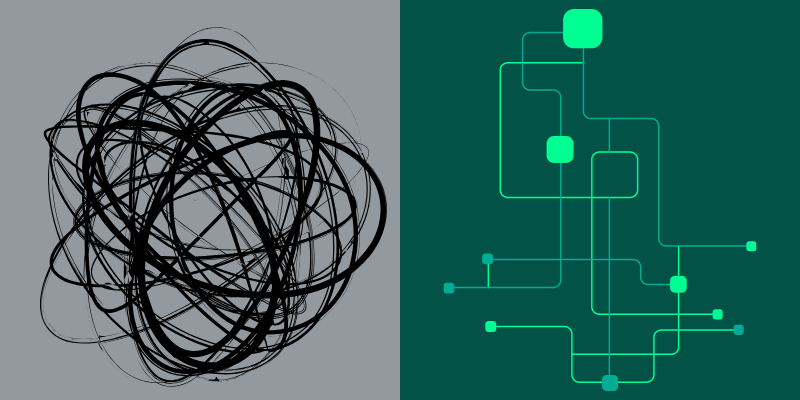

No one plans to build an outdated system. Yet many talented teams end up there. It’s often hard to pinpoint exactly when and where the breakdown occurred, because it happens one bad choice at a time.

The path to a healthy platform and the path to a brittle one look almost identical in the moment. The difference is how you handle change, risk, and commitments over time.

The Death March Toward Outdated Systems

As organizations grow, change just gets harder. Early on, you can ship a database change in a week because the blast radius is small. Later, the same change touches more users, more services, and more controls. Compliance also adds complications, and security frameworks like SOC 2, HIPAA, and PCI require more discipline. These frameworks are needed for security and trust, but they also mean that quick and spontaneous moves are no longer an option.

Even the right decisions age. Regulations change, and the people who built the first version move on. Vendors change roadmaps. A solution that fit perfectly in year one can become a problem in year three. That’s not a failure. It’s the natural result of progress. The risk comes when teams don’t revisit past choices, and one day find themselves dealing with—surprise!—a significantly outdated system.

The Cost of Small Deferrals

Bad decisions add up over time, and I learned this the hard way at a previous company. We deferred a small change to a startup script that was required for an operating system upgrade. It looked like a week’s work, and we were already very busy with our normal workload, so we pushed it off. Two-and-a-half years later, that single deferral came back and caused massive challenges. We could not upgrade other systems until we went back and fixed what we should have done years ago. By skipping a week’s worth of work, we blocked the OS upgrade, which in turn kept us from adding a crucial scaling update. That scaling update would have prevented at least five customer-visible outages over that period.

My lesson learned? Small delays compound. Every “we’ll get to that later” adds friction to future work. Upgrades aren’t glamorous, but they’re important. Don’t wait to make the upgrade.

Problems with Vendor Commitment and Customizations

Vendor choices also create commitments. Contracts shape what you can change for a period of time. When a vendor is missing a feature, you build a workaround. When they later ship that feature, you face a new decision: unwind your patch or keep both paths. Either answer can be right, but avoiding the choice lets debt quietly grow.

Customization is another trap. Early deals can nudge you to build one-off features for a single customer. Do it a few times and you’re maintaining parallel versions of the product, parallel test suites, and slower releases. The product drifts away from a clean and configurable core.

Lessons from the AWS Outage

The AWS outage in October 2025 was a reminder that even the most reliable systems fail. It also showed how easy it is for companies to respond the wrong way. When an event like this happens, the instinct is to overcorrect: spin up multi-cloud projects or build redundant systems everywhere. Unfortunately, that reaction often creates more complexity and risk than it prevents.

The smarter approach is measured and driven by data. Start by understanding what the outage actually exposed. Was it an architectural dependency, a monitoring gap, or a communication failure? Fix what’s actionable first. Add a clear status page or localized redundancy for the most critical workloads, then take time to analyze what really needs to change.

No system is immune to failure. Outages are inevitable, but chaos doesn’t have to be. The difference is how you respond.

Those who can respond calmly, incrementally, and with a focus on making the next outage less disruptive than the last will ultimately have a leg up.

Avoiding the Emotional Side of Tech Debt

Technology decisions are often made emotionally, even when they feel purely logical. Teams become attached to the systems they built, the vendors they trust, or the solutions that once worked well. That attachment can make it difficult to step back and see when something no longer fits.

There’s also the sunk cost fallacy: the belief that because time or money has been invested, continuing down the same path is the only reasonable choice. In truth, both emotional attachment and the sunk cost fallacy can keep organizations from making the right change at the right time. The goal is to evaluate decisions based on present value, not past effort, and to move forward when it makes sense, even if that means quitting on an earlier decision.

When Quitting Makes Sense

In our society, quitting is often seen as failure. But in my world, quitting at the right moment is a strength. It frees you to choose the better option today, not later when the damage has already been done. Change isn’t an indictment of previous work—it’s how resilient systems stay resilient.

My thoughts on quitting have been shaped by Annie Duke’s book Quit: The Power of Knowing When to Walk Away. It reinforced for me that quitting at the right moment is a strategic decision, not a setback. I recommend it often to friends, family, and colleagues both inside and outside of tech.

How Elevate Stays Ahead

At Elevate, we design for replaceability. Our services sit behind clear interfaces, letting us run old and new systems in parallel and cut over with confidence. Earlier this year, we executed a zero-downtime database upgrade after weeks of preparation and careful dry runs. It took two attempts, and most importantly, the customers never noticed. There’s a metaphor in the tech world that depicts what we pulled off so successfully: we changed the wheels while we were driving.
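One way to picture running old and new systems in parallel behind an interface is the minimal sketch below. It is an illustration under stated assumptions, not a description of Elevate's actual code: `UserStore`, `InMemoryStore`, `ParallelRunStore`, and `cut_over` are hypothetical names. Callers read through the interface, the old system keeps answering, and the new system is shadow-read so that mismatches surface before any cutover.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Protocol


class UserStore(Protocol):
    """Hypothetical interface; callers depend on this, not on a concrete database."""

    def get_email(self, user_id: int) -> Optional[str]:
        ...


class InMemoryStore:
    """Stand-in backend for the sketch; a real one would wrap a database client."""

    def __init__(self, rows: Dict[int, str]) -> None:
        self._rows = dict(rows)

    def get_email(self, user_id: int) -> Optional[str]:
        return self._rows.get(user_id)


@dataclass
class ParallelRunStore:
    """Answers from `primary` while shadow-reading `candidate` and recording mismatches."""

    primary: UserStore
    candidate: UserStore
    mismatches: List[int] = field(default_factory=list)

    def get_email(self, user_id: int) -> Optional[str]:
        result = self.primary.get_email(user_id)
        try:
            matches = self.candidate.get_email(user_id) == result
        except Exception:
            matches = False  # a failing candidate must never break callers
        if not matches:
            self.mismatches.append(user_id)
        return result

    def cut_over(self) -> None:
        """Promote the candidate; the old system stays on as the shadow for rollback."""
        self.primary, self.candidate = self.candidate, self.primary
        self.mismatches.clear()


old_db = InMemoryStore({1: "ana@example.com", 2: "bo@example.com"})
new_db = InMemoryStore({1: "ana@example.com"})  # migration has not copied user 2 yet
store = ParallelRunStore(primary=old_db, candidate=new_db)
store.get_email(1)
store.get_email(2)
print(store.mismatches)  # [2]
```

In a sketch like this, an empty mismatch list over a long enough window is the signal that a cutover is safe, and keeping the old system as the shadow afterward leaves a path back if the new one misbehaves.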

We also document accepted risks and workarounds, and we diligently schedule when to revisit them. When the context changes, we reevaluate.

For example, we use Temporal’s cloud offering for backend workflow processing rather than building it ourselves, because these types of systems are hard to design, debug, deploy, and operate. By making that commitment, we freed up our engineers to focus on larger needs, but we also committed to crafting our workflows around Temporal and its features (and to working around any bugs, defects, or gaps). If we decide to replace Temporal in the future, we will incur a certain level of tech debt due to how we use it today. We will stay on top of this integration, and we will make the hard decision when it’s time.
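Documenting accepted risks and scheduling when to revisit them can be as simple as a small register with a review date on every entry. The sketch below is one possible shape, not Elevate's actual tooling: the `AcceptedRisk` fields, the `due_for_review` helper, and every entry, owner, and date are hypothetical and invented for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List


@dataclass(frozen=True)
class AcceptedRisk:
    """One documented risk or workaround, with a date to look at it again."""

    summary: str
    owner: str
    accepted_on: date
    revisit_on: date


def due_for_review(risks: Iterable[AcceptedRisk], today: date) -> List[AcceptedRisk]:
    """Return entries whose revisit date has arrived, oldest first."""
    due = [risk for risk in risks if risk.revisit_on <= today]
    return sorted(due, key=lambda risk: risk.revisit_on)


# Hypothetical entries; the owners and dates are invented for illustration.
register = [
    AcceptedRisk("Workflows depend on vendor-specific features", "platform",
                 date(2025, 1, 15), date(2025, 7, 15)),
    AcceptedRisk("Startup script change deferred", "infra",
                 date(2025, 3, 1), date(2025, 4, 1)),
    AcceptedRisk("One-off feature for a single customer", "product",
                 date(2025, 5, 1), date(2026, 5, 1)),
]

for risk in due_for_review(register, today=date(2025, 8, 1)):
    print(risk.revisit_on.isoformat(), risk.summary)
```

The point of the revisit date is that a deferral never becomes open-ended: each entry comes back up for a decision on a known day instead of resurfacing years later as a blocker.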

These are the types of decisions I deal with every day, and in that respect, I’m no different from any other senior engineer. Every decision today has a lifespan, and the trick we pull off is spreading them out so they don’t all die at once.

In it for the Long Haul

Staying current isn’t glamorous. It’s deliberate, and it takes regular planning, upgrades, documentation, and steady work. You may not get credit for it in the short term, and most people’s eyes may glaze over when you talk about it, but you’ll feel it in long-term stability, security, and delivery speed.

Here’s my advice in a nutshell:

- Plan for ongoing renewal, not permanence. Build so parts can be replaced.

- When a decision stops serving you, change it and keep going. Don’t be afraid to quit.

- Avoid runaway tech debt caused by customizations, workarounds, and vendor commitment.

- Make the hard decisions early. They only get harder with time.

About the Author

Paul Trout is a specialist in IT infrastructure, cloud architecture, and enterprise systems, with extensive experience leading cloud migrations, AWS security and governance, network architecture, and high-volume messaging platforms. Throughout his career, he has designed and implemented scalable, secure infrastructure solutions for industries ranging from fintech to Wall Street investment banking. Paul’s leadership combines technical expertise with strategic planning, establishing him as a trusted architect for modern, cloud-based environments.

Connect with Paul and follow Elevate on LinkedIn for more great info.